1. MHPC Hardware laboratory

1.1. Local hardware available to each group

Each group has one SunFire X4100 server, which will be used as the master node, and one chassis containing two compute nodes. Most groups will have to share Ethernet switches, so we have configured the switches to give each group isolated VLANs: ports are grouped in blocks of 4, and all ports in a block belong to the same VLAN. Use one VLAN for the IPMI network and another for the SSH network. Each group also has the following cables:

3x green Ethernet cables (for IPMI network)

3x yellow Ethernet cables (for SSH network)

1x blue Ethernet cable (for Internet uplink)

1x InfiniBand cable
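The VLAN grouping described above can be sketched in a few lines of Python. This is only an illustration: it assumes that switch ports are numbered from 1 and that each VLAN covers a contiguous block of 4 ports (1-4, 5-8, and so on). The function names `vlan_group` and `same_vlan` are made up for this example, and the actual port-to-VLAN mapping depends on how your switch was configured, so check the switch itself if in doubt.

```python
def vlan_group(port: int, ports_per_vlan: int = 4) -> int:
    """Return the 0-based VLAN block index for a 1-based switch port.

    Assumption: VLANs are contiguous blocks of `ports_per_vlan` ports.
    """
    if port < 1:
        raise ValueError("switch ports are assumed to be numbered from 1")
    return (port - 1) // ports_per_vlan


def same_vlan(port_a: int, port_b: int) -> bool:
    """True if both ports fall into the same (assumed) VLAN block."""
    return vlan_group(port_a) == vlan_group(port_b)


if __name__ == "__main__":
    # Print the assumed block for the first 12 ports of a switch.
    for port in range(1, 13):
        print(f"port {port:2d} -> VLAN block {vlan_group(port)}")
```

Under these assumptions, two cables plugged into ports 1 and 4 end up in the same VLAN, while ports 4 and 5 are isolated from each other.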

1.2. Starting Point

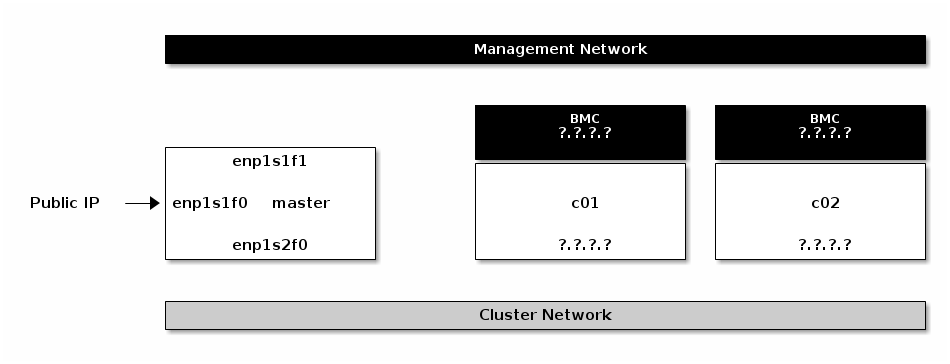

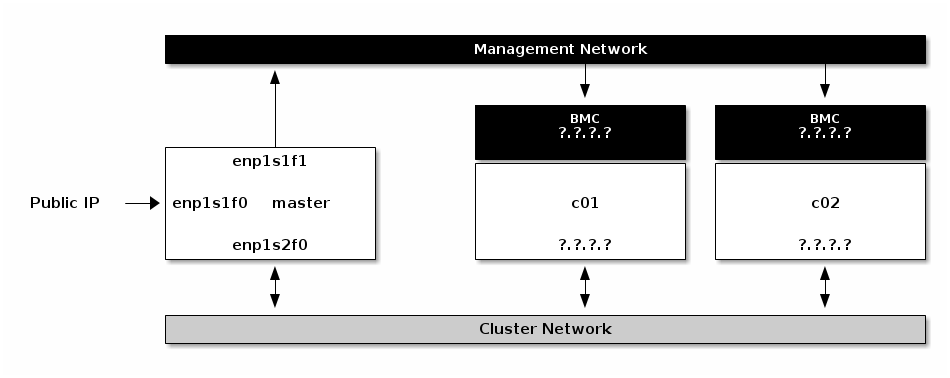

Each group starts out with bare-metal hardware: one master node and two compute nodes. To save time, each master node has been preinstalled with a minimal AlmaLinux setup. Master nodes can access the outside world via their first Ethernet port (enp1s1f0).

Note

Interface names are assigned automatically by systemd/udev (v197 and newer), so they might be different on your system.

For instance, em[1-4] could be eno[1-4].

For further information about the naming scheme, please follow this link.
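To see which names your own system uses, a small script like the following may help. It is a sketch, not part of the lab setup: it assumes a Linux system that exposes interfaces under `/sys/class/net`, and the prefix table only covers the common systemd and biosdevname patterns. The helper names `classify` and `list_interfaces` are invented for this example.

```python
import os
import re

# Common name prefixes and what they usually indicate.
# This table is a simplification and does not cover every scheme.
SCHEMES = [
    (re.compile(r"^eno\d+"), "onboard device (systemd)"),
    (re.compile(r"^ens\d+"), "PCI hotplug slot (systemd)"),
    (re.compile(r"^enp\d+s\d+"), "PCI bus location (systemd)"),
    (re.compile(r"^enx[0-9a-f]{12}$"), "MAC-based (systemd)"),
    (re.compile(r"^em\d+"), "embedded NIC (biosdevname)"),
    (re.compile(r"^eth\d+"), "legacy kernel name"),
]


def classify(name: str) -> str:
    """Guess which naming scheme produced an interface name."""
    for pattern, label in SCHEMES:
        if pattern.match(name):
            return label
    return "other"


def list_interfaces(sys_net: str = "/sys/class/net") -> list:
    """Return the sorted entries found in the sysfs network directory."""
    return sorted(os.listdir(sys_net))


if __name__ == "__main__":
    if os.path.isdir("/sys/class/net"):
        for name in list_interfaces():
            print(f"{name:12s} {classify(name)}")
```

If the output shows `eno1` where this guide says `em1`, substitute the name from your system wherever the guide refers to that port.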

Starting Point

1.3. Connect master to outside network

Start by connecting port 0 of the master node to the outside network on port 1 on the lab wall (the first port from the left).

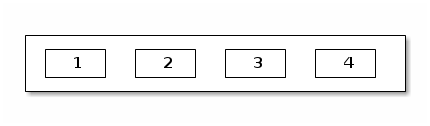

Wall ports in MHPC computer lab

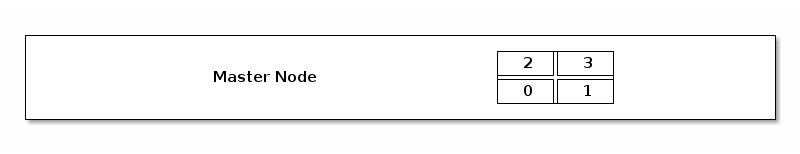

Back side of master node (SunFire X4100)
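Once the uplink cable is plugged in, you may want to confirm that the master sees a link. The sketch below reads the kernel's `operstate` file from sysfs. It assumes a Linux master node and uses the interface name `enp1s1f0` from this guide, which may differ on your system. The function name `link_state` is invented for this example.

```python
from pathlib import Path


def link_state(interface: str, sys_net: str = "/sys/class/net") -> str:
    """Return the kernel-reported operstate, or 'missing' if not found."""
    state_file = Path(sys_net) / interface / "operstate"
    try:
        return state_file.read_text().strip()
    except FileNotFoundError:
        # No such interface: the name is probably different on this system.
        return "missing"


if __name__ == "__main__":
    name = "enp1s1f0"  # assumed name of the uplink port; adjust if needed
    print(f"{name}: {link_state(name)}")
```

A result of `missing` usually means the interface has a different name on your machine, while `down` suggests checking the cable and the wall port.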

1.4. Connect all ports to the correct switch ports

Each group's network switch is configured with multiple VLANs. This effectively gives you two independent switches.

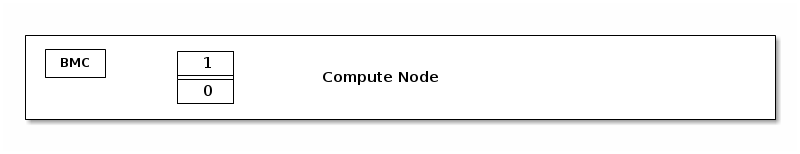

We use one VLAN/switch to create a network for IPMI. This will allow us to control the compute nodes remotely from the master. Connect port 1 of the master to this first VLAN/switch, and connect the BMC of each compute node to the same network.
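To give an idea of what remote control over the IPMI network can look like, here is a hedged sketch that builds an `ipmitool` command line in Python. It assumes that `ipmitool` is installed on the master and that the BMCs accept IPMI-over-LAN sessions via the `lanplus` interface. The address `192.0.2.11`, the user `admin`, and the password `changeme` are placeholders, not values from this lab. The helper names `ipmi_command` and `run_ipmi` are invented for this example.

```python
import shlex
import subprocess


def ipmi_command(bmc_host: str, user: str, password: str, *action: str) -> list:
    """Build an ipmitool argument list for a remote BMC."""
    return [
        "ipmitool", "-I", "lanplus",
        "-H", bmc_host, "-U", user, "-P", password,
        *action,
    ]


def run_ipmi(bmc_host: str, user: str, password: str, *action: str) -> str:
    """Run the command and return its output (requires ipmitool and a BMC)."""
    cmd = ipmi_command(bmc_host, user, password, *action)
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    # Placeholder BMC address and credentials; replace with your own.
    cmd = ipmi_command("192.0.2.11", "admin", "changeme",
                       "chassis", "power", "status")
    # Only print the command here, so nothing is executed by accident.
    print(shlex.join(cmd))
```

Printing the command first makes it easy to review before running it. Call `run_ipmi` only once the BMC addresses and credentials for your nodes are known.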

Back side of compute node

The other VLAN will be used for SSH access. Connect port 2 of the master to this second VLAN/switch, and use port 0 of each compute node to connect it to this second network.

| Port on Master | Port on Compute Node | Network |
|---|---|---|
| 0 | N/A | First network plug on wall (1, 5, 9, 13, 17, or 21) |
| 1 | BMC | Management (IPMI) Network |
| 2 | 0 | Cluster (SSH) Network |

Cluster Connected
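As a sanity check, the wiring plan can be written down as data and compared with the cables handed out in section 1.1. The sketch below assumes one master and two compute nodes, and uses the color coding from the cable list (green for IPMI, yellow for SSH, blue for the uplink). The node names `compute1` and `compute2` and the helper names are hypothetical labels chosen for this example.

```python
from collections import Counter

COMPUTE_NODES = 2  # each group has two compute nodes


def cabling_plan(compute_nodes: int = COMPUTE_NODES) -> list:
    """Return (device, port, network, cable color) for every connection."""
    plan = [
        ("master", "0", "uplink", "blue"),
        ("master", "1", "ipmi", "green"),
        ("master", "2", "ssh", "yellow"),
    ]
    for n in range(1, compute_nodes + 1):
        node = f"compute{n}"  # hypothetical node label
        plan.append((node, "BMC", "ipmi", "green"))
        plan.append((node, "0", "ssh", "yellow"))
    return plan


def cables_needed(plan: list) -> Counter:
    """Count how many cables of each color the plan requires."""
    return Counter(color for *_, color in plan)


if __name__ == "__main__":
    for device, port, network, color in cabling_plan():
        print(f"{device:9s} port {port:3s} -> {network:6s} ({color})")
    print(dict(cables_needed(cabling_plan())))
```

With two compute nodes the plan needs 3 green, 3 yellow, and 1 blue cable, which matches the Ethernet cables listed in section 1.1. The InfiniBand cable is not covered by this table.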